OpenCL GPU API User Guide

To take advantage of the increased performance of the GPU API compared to the CPU API, you must first create an equivalent version of your API model in a different format. GPU API models must be written in *.cl files, which is the OpenCL file extension. GPU API models are not self-contained: they must run in tandem with an existing CPU API model.

Version 3.0 of the API is not supported in EDEM 2020. If the OpenCL Error Message "Version of the <API name> plugin is no longer supported" is shown when running the API model on GPU then the API model will have to be updated to use the v3.1 API or later.

Alongside the introduction of the OpenCL GPU API, the existing CPU API has also been updated significantly. The changes to the CPU API have been made so that the inputs and outputs of a user’s API code are largely similar, if not the same, whether the code is written for CPU or GPU.

To convert existing CPU API code (*.cpp/*.h) to be compatible with the GPU API (*.cl), do the following:

- Update the existing CPU API code (.cpp) to v3.1 of the API.

- Recompile the CPU API to a library (.dll or .so).

- Create an OpenCL (.cl) file from the updated CPU API code.

- Create a DataTypes.cl file for transferring information between GPU and CPU.

- Run a simulation with the library file (for CPU) and .cl files (for GPU) in the same location.

Updating to v3.1 of the API from v2.x

The first step is to update your API code to be compatible with API v3.1. Compared to versions 2.x of the API, the externalForce() and calculateForce() functions have been changed significantly to match the incoming GPU API format. The same inputs are available as in previous versions of the API, but they are now accessed through several structures rather than as individual arguments.

For example, the following code assigns a rolling friction value using a v2.6 Contact Model:

variable1 = variable2 * rollingFriction;

whereas to do the same using a v3.1 Contact Model a user would now write:

variable1 = variable2 * interaction.rollingFriction;

To access the simulation time using a v2.6 Particle Body Force, use the following code:

if (time > 5.0)

{

...

}

To access the simulation time using v3.1 Particle Body Force, use the following code:

if (timeStepData.time > 5.0)

{

...

}
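The move from loose inputs to structures can be pictured with a minimal sketch. The types SketchTimeStepData and SketchInteraction below are hypothetical stand-ins, not the real EDEM API structures; only the member names time and rollingFriction are taken from the examples above.

```cpp
#include <cassert>

// Hypothetical stand-ins for the v3.1 argument structures.
// Only the member names `time` and `rollingFriction` come from this guide.
struct SketchTimeStepData
{
    double time; // current simulation time
};

struct SketchInteraction
{
    double rollingFriction; // rolling friction value for the contact pair
};

// v2.x style: the simulation time arrives as its own input.
bool isAfterStartTimeV2(double time)
{
    return time > 5.0;
}

// v3.1 style: the same input is read from a structure.
bool isAfterStartTimeV3(const SketchTimeStepData& timeStepData)
{
    return timeStepData.time > 5.0;
}

// v3.1 style: rolling friction is read from the interaction structure.
double scaleByRollingFriction(double variable2, const SketchInteraction& interaction)
{
    return variable2 * interaction.rollingFriction;
}
```

The logic of the model is unchanged in this sketch; only the way each input is reached differs.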

A complete list of all these structures and the variables that they hold can be found in the EDEM API documentation. Once you have made these changes, the corresponding *.cpp file that informs EDEM about which API version to use will also need to be updated to reflect the change, for example, for a Particle Body Force:

…

EXPORT_MACRO int GETEFINTERFACEVERSION()

{

static const int INTERFACE_VERSION_MAJOR = 0x03;

static const int INTERFACE_VERSION_MINOR = 0x01;

static const int INTERFACE_VERSION_PATCH = 0x00;

…

where … is used to imply additional code has been omitted for brevity.

As of EDEM 2019.0, or v3.0 of the API interface, two required methods were added for contact model plugins, namely getModelType() and getExecutionChainPosition(). You must specify these to determine the type of physics and where in the contact model chain the model is positioned.

The types of models available are Base (such as Hertz-Mindlin), Optional (such as Heat Transfer) and Rolling Friction. The ApiTypes.h file details the range of options available for both the model type and chain position.

The following examples show how to add these to the custom model header file:

NApi::EPluginModelType getModelType() override;

NApi::EPluginExecutionChainPosition getExecutionChainPosition() override;

And the custom model .cpp file:

NApi::EPluginModelType CHertzMindlin_v3_1_0::getModelType()

{

return EPluginModelType::eBase;

}

NApi::EPluginExecutionChainPosition CHertzMindlin_v3_1_0::getExecutionChainPosition()

{

return EPluginExecutionChainPosition::eBasePos;

}

Only the Contact Model and Particle Body Force versions have been updated to v3.x. Custom Factories remain entirely on the CPU and have not been updated at this time.

Handling Operations

OpenCL is based on the C programming language, so the *.cl file format does not support some of the C++ features that users may be used to. Although there are some minor differences, users can broadly expect that any limitations of C (compared to C++) also apply to OpenCL. Basic operations such as brackets, division, multiplication, addition and subtraction are supported for doubles.

Operator overloading is not supported in OpenCL, so these operations are not readily available for the vector and matrix classes that are common throughout EDEM API models. Even relatively simple vector algebra, such as multiplying a vector by a double, requires multiplying each element of the vector by the double manually. To simplify this, a range of helper functions has been introduced for the GPU API to replace the overloaded operators that are not available. For example, multiplying a vector by a double in the CPU API may take the following form:

CSimple3DVector vectorName;

double d;

CSimple3DVector scaledVector = d * vectorName;

where C++ handles the multiplication of the vector elements. On GPU this would take the form:

CVector vectorName;

double d;

CVector scaledVector = vecMultiply(vectorName, d);

where the function vecMultiply() has been used to multiply the vector by a double. Similar functions are available for vector addition/subtraction, dot-product, cross-product, length, and many more. A complete list of available functions can be found in the EDEM API documentation. Any operation that is not a straightforward operation on a double must be replaced with one of the helper functions, otherwise it will likely lead to an error at run time.
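To illustrate what such helper functions do in place of overloaded operators, here is a minimal sketch. SketchVector and the sketchVec* functions are hypothetical and written only for this illustration; the real CVector type and helper signatures are defined in the EDEM API documentation and may differ.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 3D vector type; the real CVector layout is defined by the EDEM API.
struct SketchVector
{
    double x;
    double y;
    double z;
};

// Scale every component by a scalar (the work an overloaded operator* does in C++).
SketchVector sketchVecMultiply(SketchVector v, double s)
{
    return SketchVector{v.x * s, v.y * s, v.z * s};
}

// Component-wise addition.
SketchVector sketchVecAdd(SketchVector a, SketchVector b)
{
    return SketchVector{a.x + b.x, a.y + b.y, a.z + b.z};
}

// Dot product of two vectors.
double sketchVecDot(SketchVector a, SketchVector b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Euclidean length, built on the dot product.
double sketchVecLength(SketchVector v)
{
    return std::sqrt(sketchVecDot(v, v));
}
```

Because each helper is a plain function over a plain struct, the same style is valid in C, which is why it suits code that has to be ported to a *.cl file.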

Function Names

In addition to the operation changes, the next major difference between a *.cl file and its *.cpp equivalent is in the function names. There are four main functions within a *.cl file that must have the model name prefixed to them. The first two are the main functions for contact models and particle body force models, namely calculateForce() and externalForce(). The second two replace the single function configForTimestep(), namely configForTimestepParticleProperty() and configForTimestepTriangleProperty(). This means that if you are using the functions:

calculateForce()

externalForce()

configForTimestep()

in your CPU API code, then in your corresponding GPU API *.cl file they will take the following form:

ClFilenameCalculateForce()

ClFilenameExternalForce()

ClFilenameConfigForTimestepParticleProperty()

ClFilenameConfigForTimestepTriangleProperty()

where in this case the name of the *.cl file would be ClFilename. Observe the change in case for the function names as well.
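The naming rule can be expressed as a small helper that builds the four expected names from the *.cl file name. The function gpuEntryPointNames() is hypothetical and not part of the EDEM API; the suffixes are the ones listed above.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Builds the four prefixed function names expected in <clFileName>.cl.
// The suffixes are the ones listed in this guide; the helper itself is hypothetical.
std::vector<std::string> gpuEntryPointNames(const std::string& clFileName)
{
    const char* suffixes[] = {
        "CalculateForce",
        "ExternalForce",
        "ConfigForTimestepParticleProperty",
        "ConfigForTimestepTriangleProperty",
    };

    std::vector<std::string> names;
    for (const char* suffix : suffixes)
    {
        names.push_back(clFileName + suffix);
    }
    return names;
}
```

For a file named ClFilename.cl, the first entry is ClFilenameCalculateForce, matching the list above.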

Specifying the File Name

For EDEM to know the prefix of these functions, you must specify the name of the corresponding *.cl file using the following function within the *.cpp file:

getGpuFileName()

The name supplied through this function should match:

- the name of the *.cl file

- the prefix of the four functions mentioned above

The inclusion of this function informs EDEM that the API model is compatible with the GPU API.

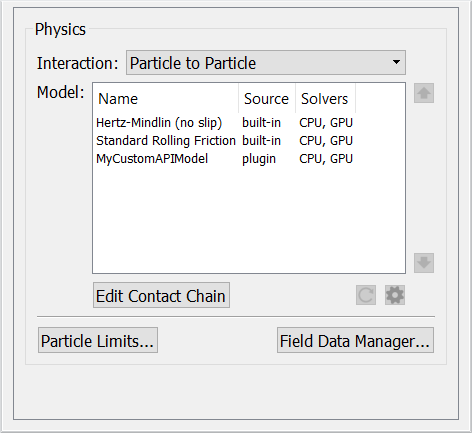

An API model without this function will show up in the EDEM Creator > Physics tab as CPU compatible only. Including this function lets EDEM know the model is GPU compatible. The API must have an associated GPU compatible .cl file for the same model to run on GPU. For example, MyCustomAPIModel in the image above has both the CPU and GPU solvers available because this function has been implemented.

To run the Custom API model on GPU the associated .cl file must also be in the same location as the Library file (MyCustomAPIModel.dll or MyCustomAPIModel.so).

Handling Parameters

If the API model uses a preference file to get parameter values, special attention needs to be given to the handling of these parameters. The OpenCL GPU API does not have a method for directly reading from a preference file. For this reason, the CPU plugin (*.dll file) continues to read the preference file and a series of functions need to be used to pass this data to the GPU API model.

If you are using parameters from a preference file, you will have to handle the parameter data using one of four available structures:

typedef struct

{} SParticleParameterData;

typedef struct

{} SGeometryParameterData;

typedef struct

{} SContactParameterData;

typedef struct

{} SSimulationParameterData;

Each of these four structures has a corresponding ‘get’ function within the API (though there are two to get contactParameterData):

getParticleParameterData()

getGeometryParameterData()

getPartGeomContactParameterData()

getPartPartContactParameterData()

getSimulationParameterData()

If you wish to pass a parameter from a preference file to an OpenCL GPU API model, it must first be passed into one of these ‘get’ functions. You can find the definitions for these functions in the v3.1 header files (IPluginContactModelV3_1_0.h and IPluginParticleBodyForceV3_1_0.h).

Taking the bonded contact model as an example, it uses a preference file to read in six different values relating to the bonds to be formed. As these values are assigned to the simulation, they should be handled by the getSimulationParameterData() function (they do not relate to a contact and they are not parameters for specific particles/geometries). This means that the start of the *.cl file is as follows:

typedef struct

{} SParticleParameterData;

…

typedef struct

{

double m_requestedBondTime;

int torque_feedback;

int break_compression;

double damping_coeff;

double rotation_coeff;

double m_contactRadiusScale;

} SSimulationParameterData;

In addition to setting up these structs within the *.cl file, the corresponding *.cpp and *.h files also need to be amended to use the getSimulationParameterData() function. The *.h file will need to include version 3.1 of the contact model API:

#include "IPluginContactModelV3_1_0.h"

and also include the function declaration for the getSimulationParameterData() function:

virtual unsigned int getSimulationParameterData(void* parameterData);

The *.cpp file will then need to implement this function and contain the parameters you wish to pass to the GPU API model:

unsigned int CBonded::getSimulationParameterData(void* parameterData)

{

SSimulationParameterData* params = reinterpret_cast<SSimulationParameterData*>(parameterData);

params->m_requestedBondTime = m_requestedBondTime;

params->torque_feedback = torque_feedback;

params->break_compression = break_compression;

params->damping_coeff = damping_coeff;

params->rotation_coeff = rotation_coeff;

params->m_contactRadiusScale = m_contactRadiusScale;

return sizeof(SSimulationParameterData);

}

The reinterpret_cast operation is required to convert parameterData, which getSimulationParameterData() receives as a void pointer, into a pointer to the structure type that holds all the parameters being passed, as shown:

typedef struct

{

double m_requestedBondTime;

int torque_feedback;

int break_compression;

double damping_coeff;

double rotation_coeff;

double m_contactRadiusScale;

} SSimulationParameterData;

unsigned int CBonded::getSimulationParameterData(void* parameterData)

{

SSimulationParameterData* params = reinterpret_cast<SSimulationParameterData*> (parameterData);

…

}
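The round trip through the void pointer can be tried outside EDEM with a minimal sketch. SketchBondedPlugin and fetchParameters() are hypothetical stand-ins for the plugin class and for whatever code in the solver calls it, and the parameter values are invented for illustration. It is an assumption of this sketch that the data is copied as raw bytes, which is why the member order and types mirror the struct declared in the *.cl file.

```cpp
#include <cassert>

// Same layout as the SSimulationParameterData struct shown in this guide.
typedef struct
{
    double m_requestedBondTime;
    int    torque_feedback;
    int    break_compression;
    double damping_coeff;
    double rotation_coeff;
    double m_contactRadiusScale;
} SSimulationParameterData;

// Hypothetical stand-in for the plugin class. The values are invented here;
// in a real model they would come from the preference file.
class SketchBondedPlugin
{
public:
    unsigned int getSimulationParameterData(void* parameterData) const
    {
        // Reinterpret the untyped buffer as the parameter structure.
        SSimulationParameterData* params =
            reinterpret_cast<SSimulationParameterData*>(parameterData);

        params->m_requestedBondTime  = 0.5;
        params->torque_feedback      = 1;
        params->break_compression    = 0;
        params->damping_coeff        = 0.1;
        params->rotation_coeff       = 0.2;
        params->m_contactRadiusScale = 1.5;

        // Report how many bytes were written into the buffer.
        return static_cast<unsigned int>(sizeof(SSimulationParameterData));
    }
};

// Hypothetical caller: provides storage and passes it as a void pointer.
SSimulationParameterData fetchParameters(const SketchBondedPlugin& plugin,
                                         unsigned int& bytesWritten)
{
    SSimulationParameterData storage = {};
    bytesWritten = plugin.getSimulationParameterData(&storage);
    return storage;
}
```

Returning sizeof(SSimulationParameterData) tells the caller how much of the buffer holds valid data.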

For additional examples of how to handle parameters coming from preference files, see the EEPA and Heat Conduction contact model examples on the EDEM forum.

Handling Custom Properties

Like parameters coming from preference files, custom properties also require their own special treatment for OpenCL GPU API models. The updated *CalculateForce() function contains the following structure for handling custom properties:

void ApiModelCalculateForce(

int contactIndex,

SApiTimeStepData* timestepData,

const SDiscreteElement* element1,

const SDiscreteElement* element2,

SApiCustomProperties* customProperties,

…

Custom properties are not handled by variable name, but rather by their indices. This means the first step is to assign each of the custom properties to an index. The indices assigned to the custom properties in the *.cl file must match those that are already written in the *.cpp file; assigning the indices cannot be done arbitrarily. Using the bonded model again as an example, towards the end of the *.cpp file the custom properties indices are defined in the following manner:

bool CBonded::getDetailsForProperty(…)

{

if (0 == propertyIndex && eContact == category)

{

strcpy(name, BOND_STATUS.c_str());

…

}

if (1 == propertyIndex && eContact == category)

{

strcpy(name, BOND_NORMAL_FORCE.c_str());

…

}

…

Here, the two custom properties BOND_STATUS and BOND_NORMAL_FORCE take on indices 0 and 1, respectively. To set up these custom properties in the corresponding *.cl file:

void calculateForce(…)

{

const int BOND_STATUS_INDEX = 0;

const int BOND_NORMAL_FORCE_INDEX = 1;

…

This step is not mandatory, but it makes it easier to keep track of which index refers to which custom property and makes the overall code easier to manage. Once these indices have been set up, reading a custom property value requires a pointer to global memory, denoted __global in OpenCL. Setting a custom property delta requires a pointer to local memory, denoted __local in OpenCL. The functions getContactPropertyValue() and getContactPropertyDelta() can then be used:

__global const double* m_BondStatus = getContactPropertyValue(customProperties, BOND_STATUS_INDEX);

__local double* m_BondStatusDelta = getContactPropertyDelta(customProperties, BOND_STATUS_INDEX);

This example sets up two pointers, m_BondStatus and m_BondStatusDelta, which can now be used to access the value and delta, respectively, of the custom property BOND_STATUS.

- The custom property value is declared const because it cannot be changed directly. Instead, its value is updated by changing the delta.

- In the EDEM 2019 GPU API, both the custom property value and delta used global variables. In EDEM 2020, the local variable was introduced for the delta to improve simulation speed. Using __global for a custom property delta in EDEM 2020 results in the message “error: implicit conversion from address space "local" to address space "global" is not supported in this initialization expression __global double* <custom property name>”. The solution is to change all custom property deltas to use __local.

For additional examples of how to handle custom properties, see the EEPA, Heat Conduction and Relative Wear contact model examples in the EDEM installation folder.
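The value and delta pattern can be modelled in a few lines. This sketch assumes that the solver adds each delta to its value after the model code has run; that behaviour is inferred rather than confirmed by this guide. SketchProperty, requestNewValue() and applyDelta() are hypothetical names, and plain pointers stand in for the __global and __local pointers used in a *.cl file.

```cpp
#include <cassert>

// Hypothetical model of one custom property: a read-only value plus a delta
// that the solver is assumed to add to the value after the model has run.
struct SketchProperty
{
    double value;
    double delta;
};

// Model code: reads the value through a const pointer and writes only the delta.
void requestNewValue(const double* value, double* delta, double target)
{
    *delta = target - *value;
}

// Assumed solver step: fold the delta into the value, then clear the delta.
void applyDelta(SketchProperty& property)
{
    property.value += property.delta;
    property.delta = 0.0;
}
```

Under this assumption, a bond status of 1.0 with a requested target of 0.0 stays at 1.0 while the model runs and becomes 0.0 only once the delta has been applied.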

Transferring particle data from GPU-API to CPU-API

When running a CPU-API simulation, particle (or other) data can be stored in memory and copied between Contact Models, Particle Body Forces and Factories, so long as all models are compiled into one .dll or .so library file.

When running GPU-API simulations, the particle information is not automatically passed back to the CPU. Where this is required, for example when passing information to an API Factory that runs on CPU only, the following method can be used:

- In GPU-API, use the Particle Manager to mark a particle whose information is to be passed to the CPU.

markParticleOfInterest(externalForceParticleManager);

- Introduce the equivalent function in the CPU code. This is not required for CPU-only plugins, but is needed so that the CPU and GPU implementations match.

m_particleMngr->markParticleOfInterest(particle.ID);

- Process the particle information on CPU.

void CCustomParticleBodyForce::processParticleOfInterest(int threadID, int particleOfInterestId)

{

const NExternalForceTypesV3_0_0::SParticle particle = m_particleMngr->getParticleData(particleOfInterestId);

}

The above example allows you to process the particle information in the Particle Body Force on the CPU, allowing it to then be used in any other CPU code such as a Custom Factory.
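The mark-then-process flow can be pictured with a minimal sketch. The classes SketchParticleManager and SketchBodyForce and the function dispatchMarkedParticles() are hypothetical; in particular, it is an assumption here that the solver calls processParticleOfInterest() once for each marked particle.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for the particle manager: it only records marked IDs.
class SketchParticleManager
{
public:
    void markParticleOfInterest(int particleId) { m_marked.push_back(particleId); }
    const std::vector<int>& marked() const { return m_marked; }
    void clear() { m_marked.clear(); }

private:
    std::vector<int> m_marked;
};

// Hypothetical CPU-side body force: stores the IDs it is handed so that other
// CPU code, such as a custom factory, could read them later.
class SketchBodyForce
{
public:
    void processParticleOfInterest(int /*threadID*/, int particleOfInterestId)
    {
        m_collected.push_back(particleOfInterestId);
    }
    const std::vector<int>& collected() const { return m_collected; }

private:
    std::vector<int> m_collected;
};

// Assumed solver behaviour: invoke the CPU callback once per marked particle.
void dispatchMarkedParticles(SketchParticleManager& manager, SketchBodyForce& plugin)
{
    for (int id : manager.marked())
    {
        plugin.processParticleOfInterest(0, id);
    }
    manager.clear();
}
```

Only the marked particles reach the CPU callback in this sketch, so the amount of data handed back depends on how many particles are marked.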

Running a Simulation using the GPU API

Once you have followed the steps outlined in the preceding sections, you should be able to run your API model on GPU. To load the model into EDEM, you will need the following three files in the same location:

model.dll

The compiled CPU plugin, i.e. the *.dll file corresponding to the CPU version of the model, written using v3.x or later of the API. This is necessary as not every task relating to the running of the API model can be performed on the GPU. Certain information, for example data coming from a preference file, is cached by EDEM and made available to the GPU.

model.cl

The *.cl file, which should look similar to the v3.x version of the CPU API model, except that helper functions are used instead of operators and class methods, and the function names are prefixed with the plugin name.

As is the case when running a CPU API simulation, these files need to be either in the same location as the *.dem file of the simulation or in the folder being pointed to in Tools > Options > File Locations. They will appear in EDEM as additional models, just like the CPU case. To check whether an API model can run on CPU or GPU, look at the ‘Solvers’ value. If the API model is only capable of running on the CPU, this will read ‘CPU’. If the API model is capable of running on the GPU as well, this will read ‘CPU, GPU’. Whether the API model actually runs on CPU or GPU is determined in the Simulator at run time.

Multiple GPU API Models

As of v3.1 of the API, you can chain GPU API plugins and have multiple plugins within a single EDEM simulation. This was not the case in v3.0 of the API, which only allowed a single GPU API plugin.

Limitations and Restrictions for GPU API

- The configForTimestep() function is split into two functions for the GPU API, configForTimeStepParticleProperty() and configForTimeStepTriangleProperty(), which are limited to changing particle and geometry custom properties, respectively.

For example, to reset the heat flux for the heat conduction model:

void configForTimeStepParticleProperty(SApiElementCustomPropertyData* particleProperties)

{

__global const double* flux = getElementPropertyValue(particleProperties, FLUX_INDEX);

__local double* fluxDelta = getElementPropertyDelta(particleProperties, FLUX_INDEX);

*fluxDelta = -*flux;

}

- There is currently no support for BOOL custom properties.

- If your model combines a contact model with a particle body force and uses custom properties, the indices of the custom properties should be consistent between the two models. (See the Heat Conduction model as an example).

(c) 2023 Altair Engineering Inc. All Rights Reserved.