[13] Example Applications

Panopticon Streams is installed with a series of example applications:

❑ AggregationExample – Demonstrates how to aggregate data based on a grouping key and a set of aggregated fields.

Includes simple aggregations such as avg, count, first, last, max, min, samples, sum, sdevp, sdevs, varp, and vars.

❑ BranchExample – Demonstrates how to split a stream into one or more branches.

❑ CalculateRemoveReplaceNull – Demonstrates how to:

· remove and replace fields from output schemas

· set a field value to null

· set a field value to the current timestamp

❑ CalculationExample – Includes the SquareRoot calculation.

❑ CalculationsExample – Includes the following calculations:

· Numeric calculations such as Abs, SquareRoot, Subtract, Multiply, Divide, Truncate, IF

· Text calculations such as Upper, Lower, Proper, Left, Right, Mid, Concat, Find

· Time Period calculations such as DateDiff

It also demonstrates data type casting between Text, Number, and Date/Time.

❑ ConflateExample – Demonstrates how to lower the frequency of updates by setting a fixed interval.

❑ EmailExample – Shows how to send an email via SMTP, where the SMTP and email settings can be parameterized. Each record passed to the connector results in an email. This is primarily useful as an alerting output, where a record is forwarded to the output only if it satisfies a conditional expression.

Requires the EmailWriter plugin.

❑ ExternalInputExample – Demonstrates how to source data directly from a Kafka topic (defined in the schema registry, with the message format set to Avro).

❑ ExternalInputJsonParserExample – Demonstrates how to directly use parsed JSON input data.

❑ ExternalInputXMLParserExample – Demonstrates how to directly use parsed XML input data.

❑ FilterExample – Demonstrates how to filter a data source based on a predicate.

❑ InfluxDBExample – Allows periodic dumping of records from a Kafka topic into an InfluxDB output connector. Requires the InfluxDBWriter plugin.

❑ JDBCExample – Allows periodic dumping of records from a Kafka topic into a JDBC database output connector. Requires the JDBCWriter plugin.

❑ JoinExample – Demonstrates how to join a stream to a global table.

❑ KdbExample – Allows periodic dumping of records from a Kafka topic into a Kx kdb+ output connector. Requires the KdbWriter plugin.

❑ MetronomeExample – Demonstrates how the metronome operator generates a timestamp field schema. A static metronome has a defined frequency, while a dynamic metronome takes the frequency as an input, which determines the speed of the simulation.

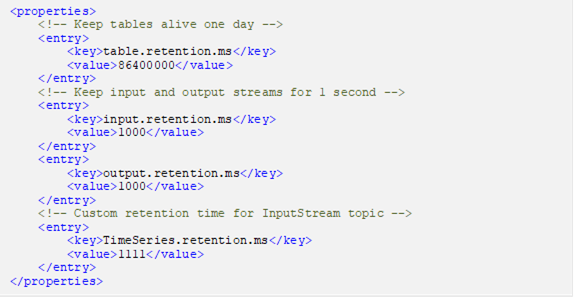

❑ RetentionTimeExample – Demonstrates how to define different retention periods for tables, input streams, output streams, and topics in an application.

This helps minimize memory utilization and the amount of data retrieved when subscribing from the beginning to the latest messages.

NOTE: Setting these properties at the application level overrides the defaults set in the streams.properties file.

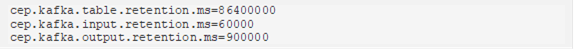

For example, if the following properties are defined in the streams.properties file:

At the application level, the input retention period will be 1,000 milliseconds instead of 60,000, and the output retention period will be 1,000 milliseconds instead of 900,000. Also, a custom topic retention period has been added using the pattern TopicName.retention.ms (for example, TimeSeries.retention.ms).
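The override behavior described here can be pictured as a layered lookup. The sketch below is illustrative only and is not Panopticon Streams code. The keys `input.retention.ms` and `output.retention.ms` are hypothetical placeholders for the actual property names, and both the value given to `TimeSeries.retention.ms` and the fallback rule in `topic_retention_ms` are assumptions. Only the 60,000, 900,000, and 1,000 millisecond values come from the example.

```python
# Illustrative model of application-level retention overrides.
# ASSUMPTION: "input.retention.ms" and "output.retention.ms" are hypothetical
# placeholder keys, not the real streams.properties property names.

SERVER_DEFAULTS = {
    "input.retention.ms": 60_000,
    "output.retention.ms": 900_000,
}

APPLICATION_PROPERTIES = {
    "input.retention.ms": 1_000,
    "output.retention.ms": 1_000,
    # Custom topic retention, following the TopicName.retention.ms pattern.
    # ASSUMPTION: the value 5_000 is made up for this sketch.
    "TimeSeries.retention.ms": 5_000,
}


def effective_properties(defaults, overrides):
    """Return a copy of the defaults with application-level values on top."""
    merged = dict(defaults)
    merged.update(overrides)
    return merged


def topic_retention_ms(properties, topic, kind):
    """Resolve the retention period for one topic.

    ASSUMPTION: a TopicName.retention.ms entry wins; otherwise the general
    setting for the given kind ("input" or "output") applies.
    """
    topic_key = f"{topic}.retention.ms"
    if topic_key in properties:
        return properties[topic_key]
    return properties[f"{kind}.retention.ms"]


effective = effective_properties(SERVER_DEFAULTS, APPLICATION_PROPERTIES)
```

With these inputs, `effective` holds 1,000 for both the input and output retention periods, and `topic_retention_ms(effective, "TimeSeries", "input")` resolves to the topic-specific value.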

❑ StockMarketSimulator – Shows a stock market simulation using streaming data with join, calculations, and metronome operators.

❑ StockStaticTimeSeriesApp – Joins a static data source and a time series data source using common keys. Also demonstrates adding a sum aggregation.

❑ StreamtoGlobalTableJoinExample – Joins stream and global table inputs using common keys.

❑ StreamToTableJoinExample – Joins stream and table inputs using common keys.

❑ TextExample – Allows periodic dumping of records from a Kafka stream topic into a Text connector. Requires the TextWriter plugin.

❑ UnionExample – Demonstrates a union of two streams.

❑ WindowedStreamExample – Demonstrates aggregation across a windowed stream.
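To make the idea of aggregating across a windowed stream more concrete, here is a minimal Python sketch. It is not how Panopticon Streams implements windowing. It assumes fixed-size, non-overlapping (tumbling) windows, which the example application may or may not use, and the function name, record layout, and sample data are all hypothetical.

```python
from collections import defaultdict


def tumbling_window_aggregate(records, window_ms):
    """Group (timestamp_ms, key, value) records into fixed-size windows and
    compute simple aggregates per (window_start, key) pair."""
    buckets = defaultdict(list)
    for timestamp_ms, key, value in records:
        # Align each record to the start of the window it falls into.
        window_start = (timestamp_ms // window_ms) * window_ms
        buckets[(window_start, key)].append(value)

    results = {}
    for group, values in buckets.items():
        total = sum(values)
        results[group] = {
            "count": len(values),
            "sum": total,
            "avg": total / len(values),
            "min": min(values),
            "max": max(values),
        }
    return results


# Hypothetical sample records: (timestamp in ms, grouping key, value).
records = [
    (0, "A", 10.0),
    (400, "A", 20.0),
    (900, "B", 5.0),
    (1200, "A", 30.0),
]
summary = tumbling_window_aggregate(records, window_ms=1000)
```

Here the two "A" records in the first second share one window, while the record at 1,200 ms falls into the next window and is aggregated separately.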