Multi-GPU Scaling

Simulation Setup

- FZG case (Technical University of Munich)

- Single phase

- Particle spacing dx = 0.3 mm, giving ≈50 million particles

- 2180 RPM

- 1 MPI process for each GPU

- CUDA-aware Open MPI library (shipped with nanoFluidX)
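The setup runs one MPI process per GPU. A common way to arrange this is to take each rank's node-local index and use it to pick a device. The sketch below shows that generic pattern and is not nanoFluidX's own mechanism. It assumes Open MPI, which exports `OMPI_COMM_WORLD_LOCAL_RANK` to every launched process. The helper names `select_gpu` and `restrict_visible_device` are hypothetical.

```python
import os


def select_gpu(env=None, num_gpus=1):
    """Map this MPI rank to one GPU on its node (one rank per GPU).

    Assumes Open MPI, which sets OMPI_COMM_WORLD_LOCAL_RANK for each
    launched process; falls back to local rank 0 if the variable is absent.
    """
    if env is None:
        env = os.environ
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    local_rank = int(env.get("OMPI_COMM_WORLD_LOCAL_RANK", "0"))
    # With one process per GPU, local rank i uses device i; the modulo
    # only guards against launching more ranks than GPUs on a node.
    return local_rank % num_gpus


def restrict_visible_device(env, num_gpus):
    """Expose only the selected GPU to this process's CUDA runtime.

    Must run before any CUDA context is created in the process,
    otherwise the CUDA runtime ignores the new CUDA_VISIBLE_DEVICES.
    """
    device = select_gpu(env, num_gpus)
    env["CUDA_VISIBLE_DEVICES"] = str(device)
    return device


if __name__ == "__main__":
    # Hypothetical usage: launched e.g. as `mpirun -np 4 python this_script.py`
    # on a node with 4 GPUs, each rank ends up seeing exactly one device.
    dev = restrict_visible_device(os.environ, num_gpus=4)
    print(f"local rank -> GPU {dev}")
```

Setting `CUDA_VISIBLE_DEVICES` per process keeps every rank's device index at 0 from CUDA's point of view, which simplifies code that assumes a single visible GPU.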

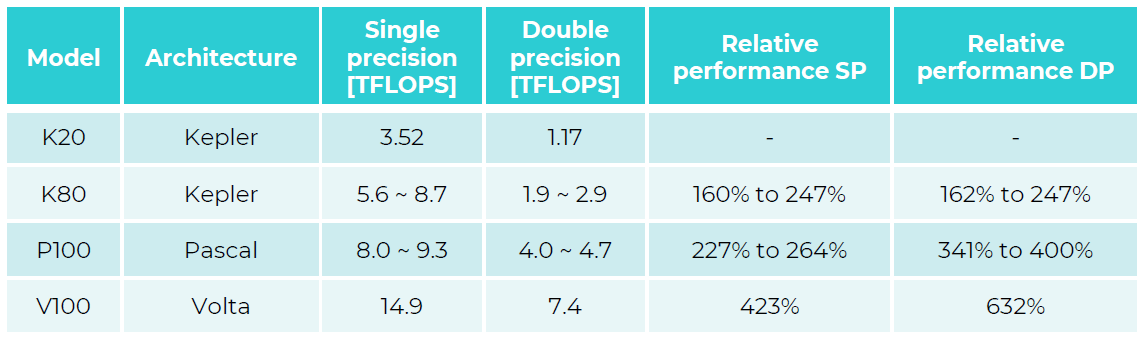

Figure 1. Official performance numbers by NVIDIA

Systems

- K20: GPU scaling solution

- K80: Dense GPU solution

- P100 + FDR: Balanced intra-node/inter-node GPU solution

- NVIDIA DGX-2: Dual-socket Intel® Xeon® Platinum 8168 CPU @ 2.70GHz (48 cores total)

Results

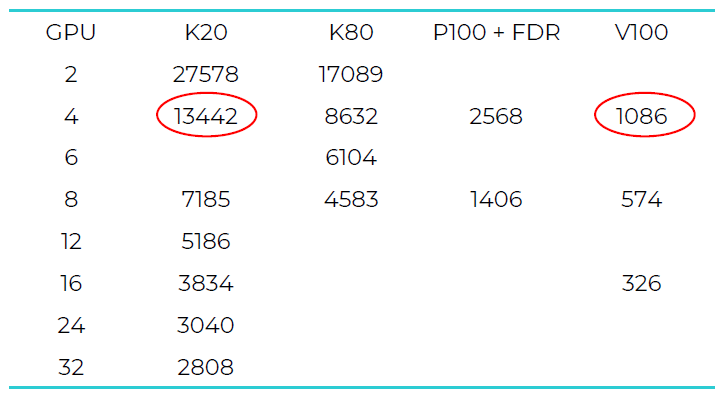

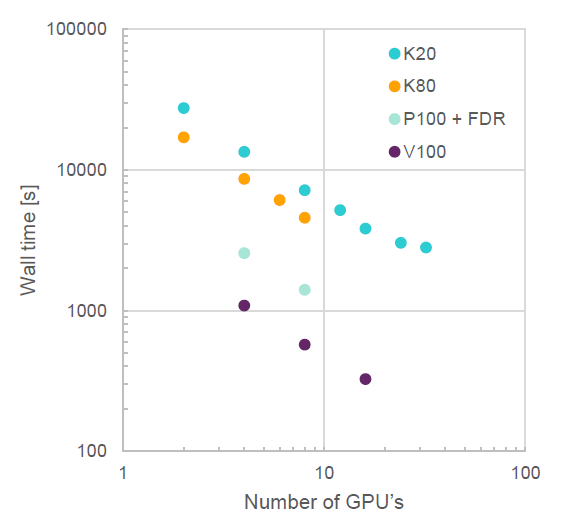

Figure 2. Wall time of the simulation (s). Circled numbers indicate a 12.4 times speedup.
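Speedups such as the 12.4 times figure in Figure 2 are ratios of wall times. Strong-scaling efficiency then divides that speedup by the increase in GPU count. The helpers below are a hypothetical sketch of these two standard definitions. The wall times in the usage lines are made-up inputs, not measured nanoFluidX results.

```python
def speedup(t_ref, t_n):
    """Speedup of a run with wall time t_n relative to reference wall time t_ref."""
    return t_ref / t_n


def strong_scaling_efficiency(t_ref, n_ref, t_n, n):
    """Parallel efficiency for a fixed problem size (strong scaling).

    E = (t_ref * n_ref) / (t_n * n). A value of 1.0 means perfectly linear
    scaling when going from n_ref to n GPUs; lower values mean each added
    GPU contributes less than its share.
    """
    return (t_ref * n_ref) / (t_n * n)


if __name__ == "__main__":
    # Hypothetical inputs: 800 s on 1 GPU, 125 s on 8 GPUs.
    print(speedup(800.0, 125.0))                          # 6.4
    print(strong_scaling_efficiency(800.0, 1, 125.0, 8))  # 0.8
```

Reporting efficiency alongside speedup makes it clear whether a large speedup came from a proportionally large number of GPUs.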

Conclusions

Scaling is demonstrated for all four GPU models (K20, K80, P100 and V100), despite the limited number of test points for the P100 and V100 cards. Strong-scaling efficiency depends on the size of the case: each GPU needs a sufficient computational load to maintain efficiency. For nanoFluidX, this load has been observed to be more than two million particles per GPU.
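The particles-per-GPU observation translates into a simple sizing check. The sketch below assumes uniform 3D particle spacing in a fixed fluid volume, so the particle count scales as (dx_ref/dx)^3. It treats the two-million-particle figure as a rule of thumb rather than a hard limit. `particles_at_spacing` and `max_gpus` are hypothetical helpers.

```python
import math


def particles_at_spacing(n_ref, dx_ref, dx):
    """Estimate the particle count after changing the particle spacing.

    Assumes a fixed 3D fluid volume filled at uniform spacing, so the
    count scales with (dx_ref / dx) ** 3. Units of dx only need to match.
    """
    return n_ref * (dx_ref / dx) ** 3


def max_gpus(n_particles, min_per_gpu=2_000_000):
    """Largest GPU count that keeps strictly more than min_per_gpu particles per GPU.

    Returns at least 1, even when the whole case is below the threshold.
    """
    n = math.ceil(n_particles / min_per_gpu) - 1
    return max(n, 1)


if __name__ == "__main__":
    n = 50e6                                   # ≈50 million particles at dx = 0.3 mm
    print(max_gpus(n))                         # 24
    finer = particles_at_spacing(n, 0.3, 0.15)
    print(finer, max_gpus(finer))              # 400000000.0 199
```

Under these assumptions, halving dx multiplies the particle count by eight, which raises the number of GPUs that can be kept above the observed per-GPU load.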