HS-5010: Reliability Analysis of an Optimum

In this tutorial, you will perform a reliability analysis to determine how sensitive the objective is to small parameter variations around the optimum.

The objective has been minimized so that the computed values are superimposed on the reference.

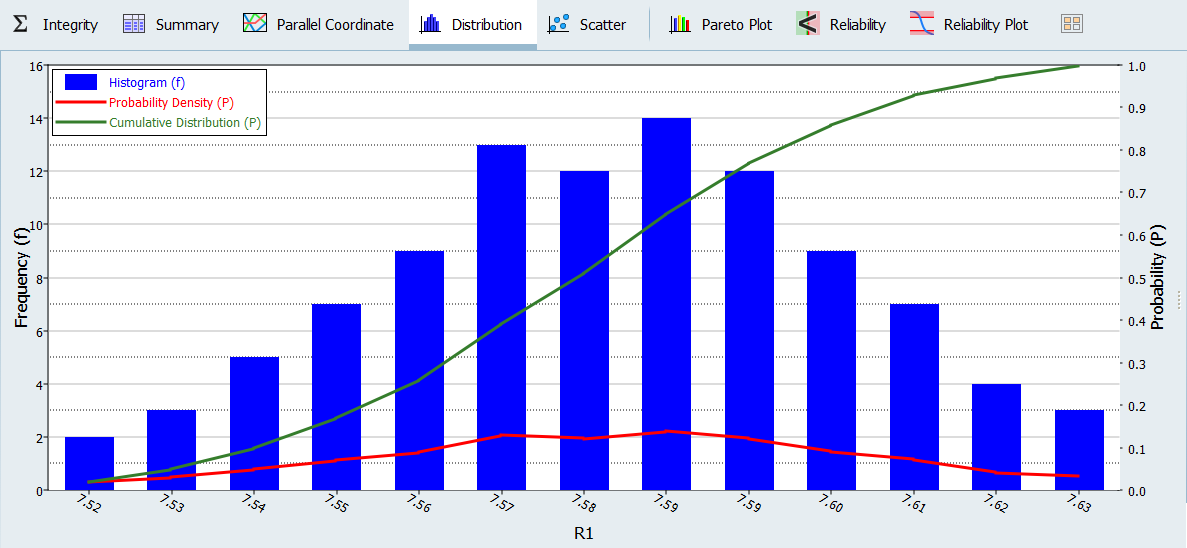

In a Stochastic study, the parameters are treated as random (uncertain) variables. This means the parameters can take random values that follow a specific distribution (such as the normal distribution shown in Figure 1) around the optimum value (µ). The variations are sampled in the design space, and the resulting designs are evaluated to gain insight into the response distribution.

Figure 1.
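The idea described above can be illustrated outside the tool with a minimal Python sketch. It is not how HyperStudy performs the study internally. The `objective` function, the optimum `(1.0, 2.0)`, and the standard deviations are hypothetical placeholders chosen only for illustration. Each parameter is drawn from a normal distribution centered on its optimum value, and the objective is evaluated for every sampled design.

```python
import random
import statistics


def objective(x, y):
    # Hypothetical stand-in for the solver response; its minimum (0.0) is at (1.0, 2.0).
    return (x - 1.0) ** 2 + 10.0 * (y - 2.0) ** 2


def sample_responses(optimum, sigmas, n_samples, seed=0):
    """Draw each parameter from N(mu, sigma) around the optimum and evaluate the objective."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    responses = []
    for _ in range(n_samples):
        design = [rng.gauss(mu, sigma) for mu, sigma in zip(optimum, sigmas)]
        responses.append(objective(*design))
    return responses


optimum = (1.0, 2.0)   # assumed optimum values (mu) of the two parameters
sigmas = (0.05, 0.05)  # assumed standard deviations of the variations
responses = sample_responses(optimum, sigmas, n_samples=1000)

print("mean objective: ", statistics.mean(responses))
print("stdev objective:", statistics.stdev(responses))
print("worst objective:", max(responses))
```

The spread of `responses` relative to the value at the optimum indicates how sensitive the objective is to the assumed parameter variations.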

Run Stochastic

In this step, you will check the reliability of the optimal solution found with GRSM. You will use a Normal Distribution for the parameter variations and the MELS DOE for the space sampling.

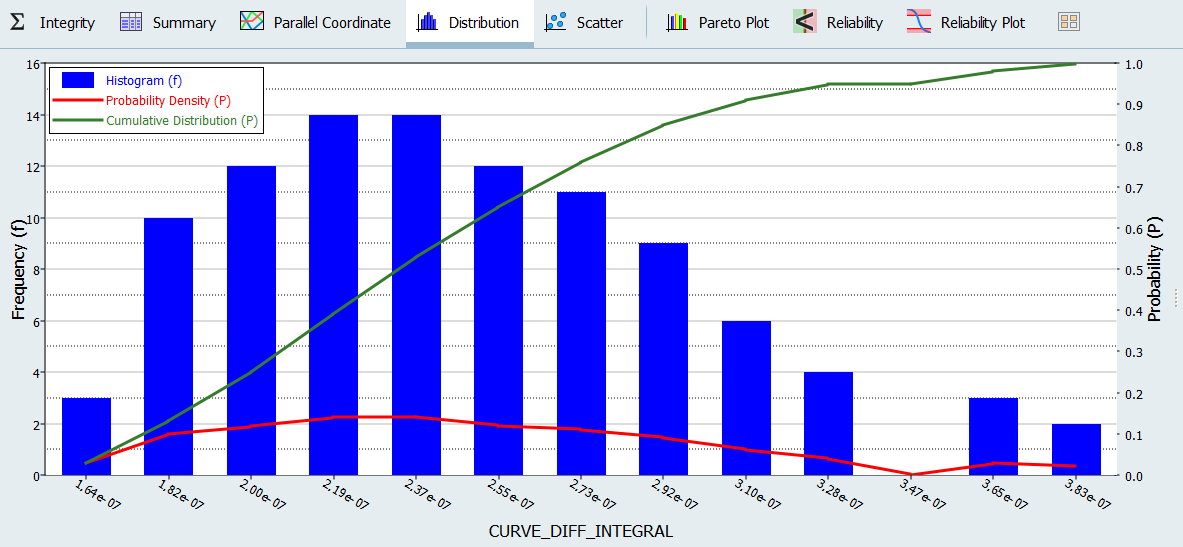

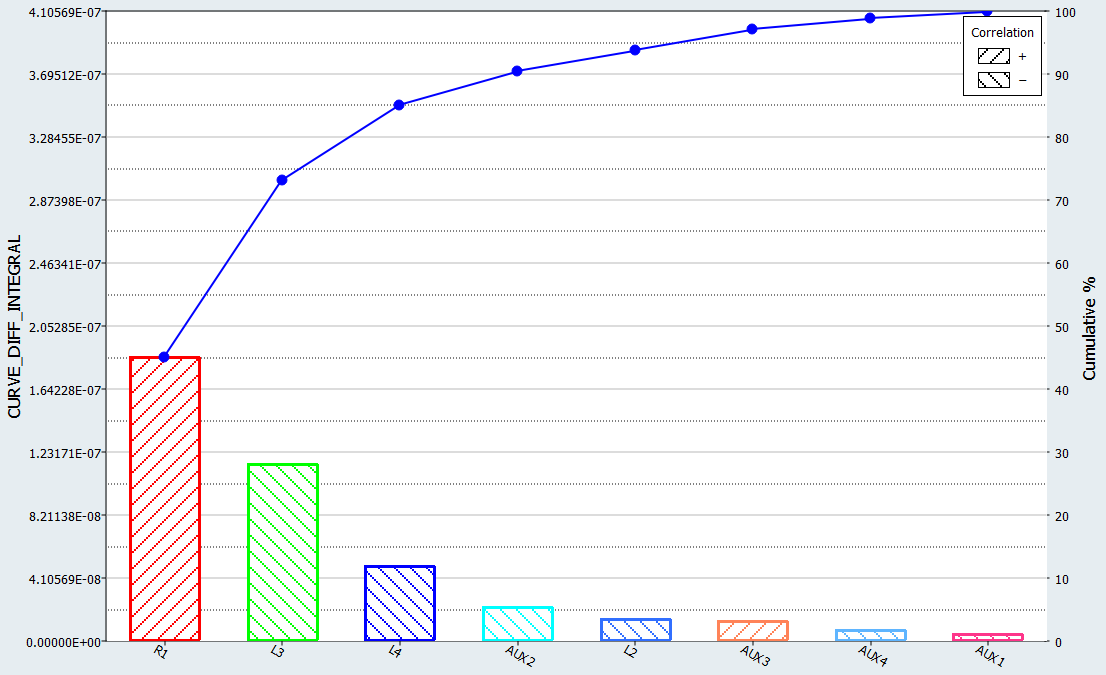
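To give a feel for how a space-filling sample can be combined with a normal distribution, the following Python sketch maps a simple rank-1 (Fibonacci) lattice through the inverse normal CDF. This is a generic stand-in and not HyperStudy's MELS implementation. The function names, the lattice generator `(1, 21)`, the sample count, and the means and standard deviations are all assumptions made for illustration.

```python
from statistics import NormalDist


def lattice_points(n, generator=(1, 21)):
    """Rank-1 lattice in the unit square, shifted by half a cell so no coordinate is 0 or 1."""
    return [tuple(((i * g) % n + 0.5) / n for g in generator) for i in range(n)]


def to_normal(points, means, sigmas):
    """Map uniform coordinates to normally distributed parameter values via the inverse CDF."""
    dists = [NormalDist(mu, sigma) for mu, sigma in zip(means, sigmas)]
    return [tuple(d.inv_cdf(u) for d, u in zip(dists, p)) for p in points]


# 34 points with generator (1, 21) form a Fibonacci lattice (assumed values).
unit_points = lattice_points(34)
designs = to_normal(unit_points, means=(1.0, 2.0), sigmas=(0.05, 0.05))

for x, y in designs[:5]:
    print(f"{x:.4f}  {y:.4f}")
```

Compared with purely random draws, a lattice of this kind covers the space more evenly for the same number of runs, which is the general motivation for using a space-filling sampling method.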

Post-Process Stochastic Results

In this step, you will review the evaluation results within the Post-Processing step.